In our previous work [1, 2, 3], we compared first-person-view lifelog images – e.g., images taken using Narrative Clip devices – with third-person-view lifelog images – e.g., images captured by fixed infrastructure cameras. First-person-view images usually provide a very particular vantage point, and as such may miss many things: camera lenses may get covered by clothes or hair, or may simply face the wrong way due to the way they are “mounted” on the body (e.g., with a clip). Even if an unobstructed view can be had, a first-person-view image may only show a very small part of the scene, e.g., potentially never showing a person who sits right next to us. Images from fixed infrastructure cameras can compensate for such shortcomings: their high vantage point usually allows them to capture comprehensive scenes, completely unobstructed. Similarly, a first-person-view image from another person may offer an interesting alternative to our own capture. These considerations prompted us to investigate the best way to combine first-person-view and third-person-view images in RECALL to reconstruct a better representation of a previous experience.

Let’s consider the following high-level scenario that illustrates this vision:

- Morning walk to office (request access to infrastructure cameras capturing our walk)

- Stop at a coffee shop for a quick drink (request access to images captured by other people and to the ones captured by the in-shop infrastructure cameras)

- Enter the department building and meet a colleague in the hallway for a chat (request access to the colleague’s captured data showing ourselves, and access the hallway cams)

- Have a work meeting (access in-room sensor equipment – cam, mic, board contents – plus data captured by other members)

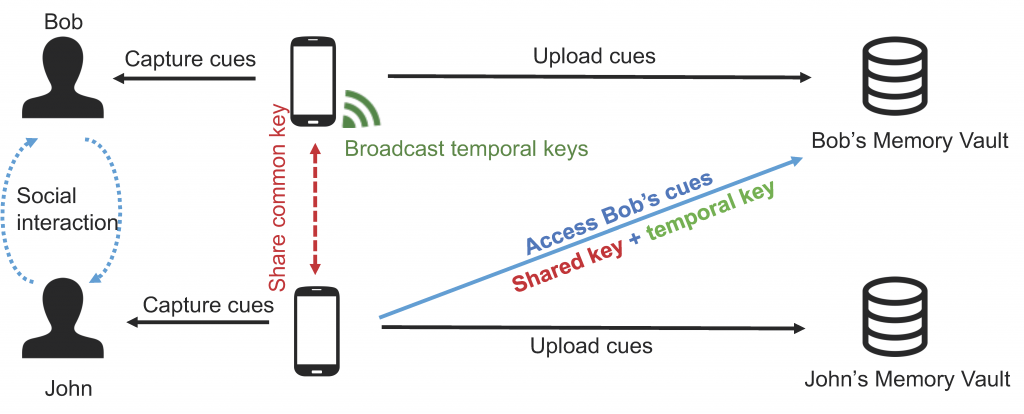

We recently began work on a system that would enable the seamless sharing of captured lifelog data in such a scenario. Figure 1 gives an overview of the envisioned data flows in the system. The key goal is of course to minimize privacy risks by sharing only with co-located people, while at the same time limiting the ability of others to track us.

The current prototype runs as an app on the life-logger’s smartphone and indicates its ability to share captured lifelog data by sending out periodic announcements using short-range wireless protocols (e.g., Bluetooth Low Energy). Such announcements contain information on how to access the shared data with the help of a frequently updated “access token”. Access tokens are needed to actually find data at a known base address. Their short lifetime means that as soon as others leave the range of our smartphone’s short-range wireless protocol, they are unable to access any data captured afterwards, as this data will use different access tokens.
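To make the idea of short-lived access tokens concrete, the following is a minimal sketch and not the prototype's actual scheme. It assumes, purely for illustration, that a token is derived from a device secret and the current time period with an HMAC, and that data is found by appending the token to the base address. The names `access_token` and `data_url`, the 60-second period, and the example URL are all hypothetical.

```python
import hashlib
import hmac


def access_token(secret: bytes, timestamp: float, period_s: int = 60) -> str:
    """Derive the token that is valid for the period containing `timestamp`.

    Assumption: tokens rotate every `period_s` seconds and are computed as
    an HMAC over the period index. The source only states that tokens are
    "frequently updated"; the derivation here is illustrative.
    """
    period_index = int(timestamp // period_s)
    mac = hmac.new(secret, period_index.to_bytes(8, "big"), hashlib.sha256)
    return mac.hexdigest()[:32]


def data_url(base_address: str, token: str) -> str:
    """Locate data captured during a token's period under the base address."""
    return f"{base_address.rstrip('/')}/{token}"


if __name__ == "__main__":
    secret = b"device-local secret"  # hypothetical; never announced
    now_token = access_token(secret, timestamp=1_000_000)
    later_token = access_token(secret, timestamp=1_000_000 + 3600)
    print(data_url("https://example.org/lifelog", now_token))
    print(now_token != later_token)
```

Under these assumptions, a peer that overheard the token for one period cannot compute the token for a later period without the secret, which is the property the short token lifetime is meant to provide.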

Upon encountering another client’s announcement, the app will run a key exchange protocol to swap its daily public key with the other client. After a certain minimum dwell time has passed and the other client is still in range, the app will add the client’s public key to the list of authorized clients that can access its (otherwise encrypted) captured data. By securing the data in this way, we can ensure that it is not enough to simply capture our announcements, but that clients need to be in close enough proximity for a sufficiently long duration in order to enable such data exchange.
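The dwell-time rule described above can be sketched as a small bookkeeping class. This is an illustrative sketch only: the class name `DwellTracker`, the two thresholds, and the choice to drop a peer's authorization once it has been silent for too long are assumptions, and the key exchange itself is taken as already done (the public key is simply passed in).

```python
from dataclasses import dataclass, field


@dataclass
class DwellTracker:
    """Authorize a peer only after it has stayed in range long enough."""

    min_dwell_s: float = 120.0  # assumed minimum co-location time
    max_gap_s: float = 15.0     # assumed longest silence before a peer counts as gone
    _first_seen: dict = field(default_factory=dict)
    _last_seen: dict = field(default_factory=dict)
    authorized: dict = field(default_factory=dict)  # peer_id -> public key

    def observe(self, peer_id: str, public_key: bytes, now: float) -> bool:
        """Record one received announcement; return True if the peer is authorized."""
        last = self._last_seen.get(peer_id)
        if last is None or now - last > self.max_gap_s:
            # New peer, or the peer left range: restart its dwell clock
            # and drop any earlier authorization.
            self._first_seen[peer_id] = now
            self.authorized.pop(peer_id, None)
        self._last_seen[peer_id] = now
        if now - self._first_seen[peer_id] >= self.min_dwell_s:
            self.authorized[peer_id] = public_key
        return peer_id in self.authorized


if __name__ == "__main__":
    tracker = DwellTracker(min_dwell_s=10, max_gap_s=5)
    for t in (0, 4, 8, 12):
        print(t, tracker.observe("peer-a", b"public-key-bytes", now=t))
```

In this sketch a passer-by who is heard only once or twice never accumulates enough continuous dwell time, so their key is never added to the authorized list.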

In order to allow clients to choose where to host their data, the known base address does not in fact return the captured data itself, but simply issues a URL redirect. However, a personal URL may act as a quasi-identifier that may allow others to track us through our announcements. To counter this sort of attack, the URLs returned by the core redirection services are encrypted with each peer’s public key (i.e., just like the captured data itself). Only if a peer has managed to establish a connection with our app, and only while this peer remains in range, will our app encrypt the actual access URLs with the peer’s public key.
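As a rough sketch of the per-peer redirect idea, the function below builds one encrypted redirect entry for each peer that is currently authorized and in range. The function name `build_redirects`, the `Encrypt` type, and the dictionary layout are hypothetical. The public-key encryption itself is deliberately left to a caller-supplied function, since the source does not name a cryptographic scheme.

```python
from typing import Callable, Dict

# (peer_public_key, plaintext) -> ciphertext; supplied by the caller.
Encrypt = Callable[[bytes, bytes], bytes]


def build_redirects(
    actual_url: str,
    peers_in_range: Dict[str, bytes],
    encrypt: Encrypt,
) -> Dict[str, bytes]:
    """Return one encrypted copy of the real hosting URL per in-range peer.

    Peers that are not in `peers_in_range` get no entry at all, so the
    personal URL never appears in a form they could read.
    """
    plaintext = actual_url.encode("utf-8")
    return {
        peer_id: encrypt(public_key, plaintext)
        for peer_id, public_key in peers_in_range.items()
    }
```

A redirection service could then serve the entry matching the requesting peer, and only a holder of the corresponding private key would learn where the data is hosted.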

Last but not least, the app does not send out announcements all the time. Instead, it uses a “social detector” to only send out announcements if it thinks that a peer is around. One way such a social detector could be implemented would be to sample the audio sensor in order to detect a discussion. Alternatively, the captured images could be analysed to detect if the owner is facing another person. Our current prototype focuses on simple audio detection, yet we are also exploring other options. Obviously, fixed infrastructure cameras could simply always send out announcements.
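One very simple way to approximate an audio-based social detector is to check how much of a short recording is louder than a threshold. The sketch below assumes audio samples normalized to the range [-1, 1]. The function names, frame length, and both thresholds are illustrative assumptions and not values from the prototype. Note that an energy test like this cannot tell speech from other noise, so it is only a starting point.

```python
import math
from typing import Sequence


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square level of one audio frame (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def peer_likely_present(
    samples: Sequence[float],
    frame_len: int = 400,           # assumed frame size in samples
    rms_threshold: float = 0.05,    # assumed level that counts as "active"
    min_active_ratio: float = 0.3,  # assumed share of active frames required
) -> bool:
    """Return True if enough frames are loud enough to suggest a conversation."""
    # Split into whole frames; a trailing partial frame is ignored.
    frames = [
        samples[i:i + frame_len]
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]
    if not frames:
        return False
    active = sum(1 for f in frames if frame_rms(f) >= rms_threshold)
    return active / len(frames) >= min_active_ratio
```

The app would send announcements only while `peer_likely_present` returns True for the most recent audio window, which keeps the device silent when its owner is alone.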

We have finished work on a first prototype and are now investigating how to build an effective social detector. The next step will be to harden the cryptographic primitives involved, followed by both user testing and performance evaluations. There are still some open challenges and issues regarding the protection of captured data and the usability of this system that we need to consider, e.g.:

- How to pre-process captured data to detect privacy issues, and subsequently exclude the private items from sharing?

- How to control use of data, i.e., what happens once another person receives access to parts of a user’s lifelog?

- Can we build tangible interfaces that support existing social protocols to control both capture and the sharing of captured data?

References:

[1] Clinch, S., Mikusz, M., and Davies, N.: “Understanding Interactions Between Multiple Wearable Cameras for Personal Memory Capture”. In: The Sixteenth International Workshop on Mobile Computing Systems and Applications, See http://www.hotmobile.org/2015/papers/posters/Clinch.pdf

[2] Clinch, S., Davies, N.A.J., Mikusz, M., Metzger, P., Langheinrich, M., Schmidt, A., and Ward, G.: “Collecting shared experiences through lifelogging: lessons learned”. In: IEEE Pervasive Computing. Volume 15, Issue 01/2016, pp. 58–67. DOI: 10.1109/MPRV.2016.6

[3] Clinch, S., Metzger, P., and Davies, N.: “Lifelogging for ’Observer’ View Memories: An Infrastructure Approach”. In: Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct Publication, ser. UbiComp ’14 Adjunct. New York, NY, USA: ACM, 2014, pp. 1397–1404. DOI: 10.1145/2638728.2641721